Getting Started with Distributed Checkpoint (DCP)

Created On: Oct 02, 2023 | Last Updated: May 08, 2025 | Last Verified: Nov 05, 2024

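Authors: Iris Zhang, Rodrigo Kumpera, Chien-Chin Huang, Lucas Pasqualin

Prerequisites:

Checkpointing AI models during distributed training can be challenging, because parameters and gradients are partitioned across trainers and the number of trainers available can change when you resume training. PyTorch Distributed Checkpoint (DCP) can help simplify this process.

In this tutorial, we show how to use the DCP APIs with a simple FSDP-wrapped model.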

How DCP works

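The torch.distributed.checkpoint module supports saving and loading models from multiple ranks in parallel. You can use it to save from any number of ranks in parallel and then reshard across a different cluster topology at load time.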
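The resharding idea can be illustrated without any distributed machinery. The sketch below is a conceptual toy, not DCP's implementation: the helper names `shard` and `reshard` are hypothetical, and a flat Python list stands in for a parameter tensor. It splits a parameter across four "ranks" at save time and regroups the same values for two ranks at load time.

```python
from typing import List


def shard(values: List[float], world_size: int) -> List[List[float]]:
    """Split a flat parameter into contiguous, near-equal chunks, one per rank."""
    base, extra = divmod(len(values), world_size)
    shards, start = [], 0
    for rank in range(world_size):
        # The first `extra` ranks each take one additional element.
        size = base + (1 if rank < extra else 0)
        shards.append(values[start:start + size])
        start += size
    return shards


def reshard(saved_shards: List[List[float]], new_world_size: int) -> List[List[float]]:
    """Reassemble the saved shards and split them again for a new topology."""
    full = [value for saved in saved_shards for value in saved]
    return shard(full, new_world_size)


parameter = [float(i) for i in range(10)]
saved = shard(parameter, world_size=4)      # layout written by 4 ranks
loaded = reshard(saved, new_world_size=2)   # layout read back by 2 ranks
print(saved)
print(loaded)
```

The toy gathers every value into one list for simplicity. The point is only that the values survive a change in world size while the per-rank layout changes.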

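In addition, through the torch.distributed.checkpoint.state_dict module, DCP handles state_dict generation and loading gracefully in distributed settings. This includes managing fully qualified name (FQN) mappings across models and optimizers, and setting default parameters for the parallelisms that PyTorch provides.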
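To see why an FQN mapping is needed at all, it helps to compare how a model and a plain optimizer identify parameters. The sketch below uses only core PyTorch on a single process, with the same toy model used later in this tutorial. The `index_to_fqn` dictionary is a hypothetical illustration of the kind of mapping involved, not what DCP builds internally.

```python
import torch
import torch.nn as nn


class ToyModel(nn.Module):
    def __init__(self):
        super().__init__()
        self.net1 = nn.Linear(16, 16)
        self.relu = nn.ReLU()
        self.net2 = nn.Linear(16, 8)

    def forward(self, x):
        return self.net2(self.relu(self.net1(x)))


model = ToyModel()
optimizer = torch.optim.Adam(model.parameters(), lr=0.1)

# The model's state_dict is keyed by fully qualified names.
model_keys = list(model.state_dict().keys())

# A plain optimizer.state_dict() refers to parameters by integer index instead.
optim_param_ids = optimizer.state_dict()["param_groups"][0]["params"]

# Hypothetical mapping from optimizer index to FQN, in registration order.
index_to_fqn = {i: name for i, (name, _) in enumerate(model.named_parameters())}

print(model_keys)
print(optim_param_ids)
print(index_to_fqn)
```

Integer indices depend on the order in which parameters were handed to the optimizer, so they are fragile once a model is wrapped or resharded. Keying both state dicts by FQN avoids that ambiguity.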

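DCP differs from torch.save() and torch.load() in a few significant ways:

- It produces multiple files per checkpoint, with at least one per rank.

- It operates in place, meaning that the model should allocate its data first and DCP then uses that storage.

- DCP offers special handling of Stateful objects (formally defined in torch.distributed.checkpoint.stateful), automatically calling their state_dict and load_state_dict methods if they are defined.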

Note

The code in this tutorial runs on an 8-GPU server, but it can be easily generalized to other environments.

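How to use DCP

Here we use a toy model wrapped with FSDP for demonstration purposes. The same APIs and logic apply to checkpointing larger models.

Saving

Now, let's create a toy module, wrap it with FSDP, feed it some dummy input data, and save it.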

import os

import torch
import torch.distributed as dist
import torch.distributed.checkpoint as dcp
import torch.multiprocessing as mp
import torch.nn as nn

from torch.distributed.fsdp import FullyShardedDataParallel as FSDP
from torch.distributed.checkpoint.state_dict import get_state_dict, set_state_dict
from torch.distributed.checkpoint.stateful import Stateful
from torch.distributed.fsdp.fully_sharded_data_parallel import StateDictType

CHECKPOINT_DIR = "checkpoint"


class AppState(Stateful):
    """This is a useful wrapper for checkpointing the Application State. Since this object is compliant
    with the Stateful protocol, DCP will automatically call state_dict/load_state_dict as needed in the
    dcp.save/load APIs.

    Note: We take advantage of this wrapper to handle calling distributed state dict methods on the model
    and optimizer.
    """

    def __init__(self, model, optimizer=None):
        self.model = model
        self.optimizer = optimizer

    def state_dict(self):
        # this line automatically manages FSDP FQN's, as well as sets the default state dict type to FSDP.SHARDED_STATE_DICT
        model_state_dict, optimizer_state_dict = get_state_dict(self.model, self.optimizer)
        return {
            "model": model_state_dict,
            "optim": optimizer_state_dict
        }

    def load_state_dict(self, state_dict):
        # sets our state dicts on the model and optimizer, now that we've loaded
        set_state_dict(
            self.model,
            self.optimizer,
            model_state_dict=state_dict["model"],
            optim_state_dict=state_dict["optim"]
        )


class ToyModel(nn.Module):
    def __init__(self):
        super(ToyModel, self).__init__()
        self.net1 = nn.Linear(16, 16)
        self.relu = nn.ReLU()
        self.net2 = nn.Linear(16, 8)

    def forward(self, x):
        return self.net2(self.relu(self.net1(x)))


def setup(rank, world_size):
    os.environ["MASTER_ADDR"] = "localhost"
    os.environ["MASTER_PORT"] = "12355"

    # initialize the process group
    dist.init_process_group("nccl", rank=rank, world_size=world_size)
    torch.cuda.set_device(rank)


def cleanup():
    dist.destroy_process_group()


def run_fsdp_checkpoint_save_example(rank, world_size):
    print(f"Running basic FSDP checkpoint saving example on rank {rank}.")
    setup(rank, world_size)

    # create a model and move it to GPU with id rank
    model = ToyModel().to(rank)
    model = FSDP(model)

    loss_fn = nn.MSELoss()
    optimizer = torch.optim.Adam(model.parameters(), lr=0.1)

    # run one dummy training step so the optimizer has state to save
    optimizer.zero_grad()
    model(torch.rand(8, 16, device="cuda")).sum().backward()
    optimizer.step()

    # AppState is Stateful, so DCP calls its state_dict() for us
    state_dict = {"app": AppState(model, optimizer)}
    dcp.save(state_dict, checkpoint_id=CHECKPOINT_DIR)

    cleanup()


if __name__ == "__main__":
    world_size = torch.cuda.device_count()
    print(f"Running fsdp checkpoint example on {world_size} devices.")
    mp.spawn(
        run_fsdp_checkpoint_save_example,
        args=(world_size,),
        nprocs=world_size,
        join=True,
    )

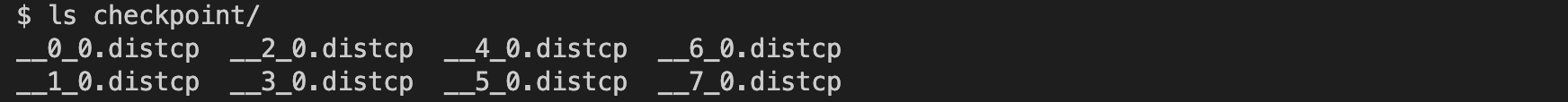

请继续检查 checkpoint 目录。您应该会看到如下所示的8个检查点文件。

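Loading

After saving, let's create the same FSDP-wrapped model and load the saved state dict from storage into the model. You can load with the same world size or with a different one.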

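Please note that you have to obtain the model's state_dict before loading and pass it to DCP's load API, dcp.load(). In the FSDP example, the AppState wrapper does this through get_state_dict. This is fundamentally different from torch.load(), which only requires the path to the checkpoint before loading. We need the state_dict before loading for two reasons:

- DCP uses the pre-allocated storage in the model's state_dict to load data from the checkpoint directory. During loading, the state_dict that is passed in is updated in place.

- DCP needs the model's sharding information to support resharding at load time.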

import os

import torch
import torch.distributed as dist
import torch.distributed.checkpoint as dcp
from torch.distributed.checkpoint.stateful import Stateful
from torch.distributed.checkpoint.state_dict import get_state_dict, set_state_dict
import torch.multiprocessing as mp
import torch.nn as nn

from torch.distributed.fsdp import FullyShardedDataParallel as FSDP

CHECKPOINT_DIR = "checkpoint"


class AppState(Stateful):
    """This is a useful wrapper for checkpointing the Application State. Since this object is compliant
    with the Stateful protocol, DCP will automatically call state_dict/load_state_dict as needed in the
    dcp.save/load APIs.

    Note: We take advantage of this wrapper to handle calling distributed state dict methods on the model
    and optimizer.
    """

    def __init__(self, model, optimizer=None):
        self.model = model
        self.optimizer = optimizer

    def state_dict(self):
        # this line automatically manages FSDP FQN's, as well as sets the default state dict type to FSDP.SHARDED_STATE_DICT
        model_state_dict, optimizer_state_dict = get_state_dict(self.model, self.optimizer)
        return {
            "model": model_state_dict,
            "optim": optimizer_state_dict
        }

    def load_state_dict(self, state_dict):
        # sets our state dicts on the model and optimizer, now that we've loaded
        set_state_dict(
            self.model,
            self.optimizer,
            model_state_dict=state_dict["model"],
            optim_state_dict=state_dict["optim"]
        )


class ToyModel(nn.Module):
    def __init__(self):
        super(ToyModel, self).__init__()
        self.net1 = nn.Linear(16, 16)
        self.relu = nn.ReLU()
        self.net2 = nn.Linear(16, 8)

    def forward(self, x):
        return self.net2(self.relu(self.net1(x)))


def setup(rank, world_size):
    os.environ["MASTER_ADDR"] = "localhost"
    os.environ["MASTER_PORT"] = "12355"

    # initialize the process group
    dist.init_process_group("nccl", rank=rank, world_size=world_size)
    torch.cuda.set_device(rank)


def cleanup():
    dist.destroy_process_group()


def run_fsdp_checkpoint_load_example(rank, world_size):
    print(f"Running basic FSDP checkpoint loading example on rank {rank}.")
    setup(rank, world_size)

    # create a model and move it to GPU with id rank
    model = ToyModel().to(rank)
    model = FSDP(model)

    optimizer = torch.optim.Adam(model.parameters(), lr=0.1)

    # AppState is Stateful, so DCP calls its state_dict() and load_state_dict() for us
    state_dict = {"app": AppState(model, optimizer)}
    dcp.load(
        state_dict=state_dict,
        checkpoint_id=CHECKPOINT_DIR,
    )

    cleanup()


if __name__ == "__main__":
    world_size = torch.cuda.device_count()
    print(f"Running fsdp checkpoint example on {world_size} devices.")
    mp.spawn(
        run_fsdp_checkpoint_load_example,
        args=(world_size,),
        nprocs=world_size,
        join=True,
    )

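If you would like to load a saved checkpoint into a non-FSDP-wrapped model in a non-distributed setup, perhaps for inference, you can also do that with DCP. By default, DCP saves and loads a distributed state_dict in Single Program Multiple Data (SPMD) style. However, if no process group is initialized, DCP infers that the intent is to save or load in "non-distributed" style, meaning entirely in the current process.

Note

Distributed checkpoint support for Multi-Program Multi-Data is still under development.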

import os

import torch
import torch.distributed.checkpoint as dcp
import torch.nn as nn

CHECKPOINT_DIR = "checkpoint"


class ToyModel(nn.Module):
    def __init__(self):
        super(ToyModel, self).__init__()
        self.net1 = nn.Linear(16, 16)
        self.relu = nn.ReLU()
        self.net2 = nn.Linear(16, 8)

    def forward(self, x):
        return self.net2(self.relu(self.net1(x)))


def run_checkpoint_load_example():
    # create the non FSDP-wrapped toy model
    model = ToyModel()

    # The checkpoint saved earlier nests everything under the "app" key
    # (see AppState), so the same nesting is mirrored here. Only the model
    # entries are requested; the optimizer entries are left unread.
    state_dict = {
        "app": {"model": model.state_dict()},
    }

    # since no process group is initialized, DCP will disable any collectives.
    dcp.load(
        state_dict=state_dict,
        checkpoint_id=CHECKPOINT_DIR,
    )
    model.load_state_dict(state_dict["app"]["model"])


if __name__ == "__main__":
    print("Running basic DCP checkpoint loading example.")
    run_checkpoint_load_example()

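Formats

One drawback not yet mentioned is that DCP saves checkpoints in a format that is inherently different from the one generated by torch.save. This can be an issue when users wish to share models with others who are accustomed to the torch.save format, or more generally want to add format flexibility to their applications. For this case, we provide the format_utils module in torch.distributed.checkpoint.format_utils.

A command-line utility is provided for convenience. It follows this format: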

python -m torch.distributed.checkpoint.format_utils <mode> <checkpoint location> <location to write formats to>

In the command above, mode is one of torch_to_dcp or dcp_to_torch.

Alternatively, methods are also provided for users who wish to convert checkpoints directly.

import os

import torch
import torch.distributed.checkpoint as dcp
from torch.distributed.checkpoint.format_utils import dcp_to_torch_save, torch_save_to_dcp

CHECKPOINT_DIR = "checkpoint"
TORCH_SAVE_CHECKPOINT_DIR = "torch_save_checkpoint.pth"

# convert dcp model to torch.save (assumes checkpoint was generated as above)
dcp_to_torch_save(CHECKPOINT_DIR, TORCH_SAVE_CHECKPOINT_DIR)

# converts the torch.save model back to DCP
torch_save_to_dcp(TORCH_SAVE_CHECKPOINT_DIR, f"{CHECKPOINT_DIR}_new")

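Conclusion

In conclusion, we have learned how to use DCP's save and load APIs, as well as how they differ from torch.save and torch.load. Additionally, we have learned how to use get_state_dict and set_state_dict to automatically manage parallelism-specific FQNs and defaults during state dict generation and loading.

For more information, please see the following: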